From 1C Chaos to an Incremental DWH on Postgres, Airflow, and dbt

Original article on denvic.tech (in Russian)

Aleksandr Mazalov (Senior Data Engineer & Data Architect) shares a real-world case of migrating from a chaotic architecture built on 1C and KNIME to a modern incremental DWH on Postgres, Airflow, and dbt.

The Problem

The legacy architecture copied the entire 1C database to MS SQL Server daily — a 3–4 hour process that frequently crashed. KNIME required 40 GB of RAM, SQL scripts were overloaded with JOINs, and data lagged two days behind reality. The result: distrust in numbers, manual verification, and inconsistent metrics across teams.

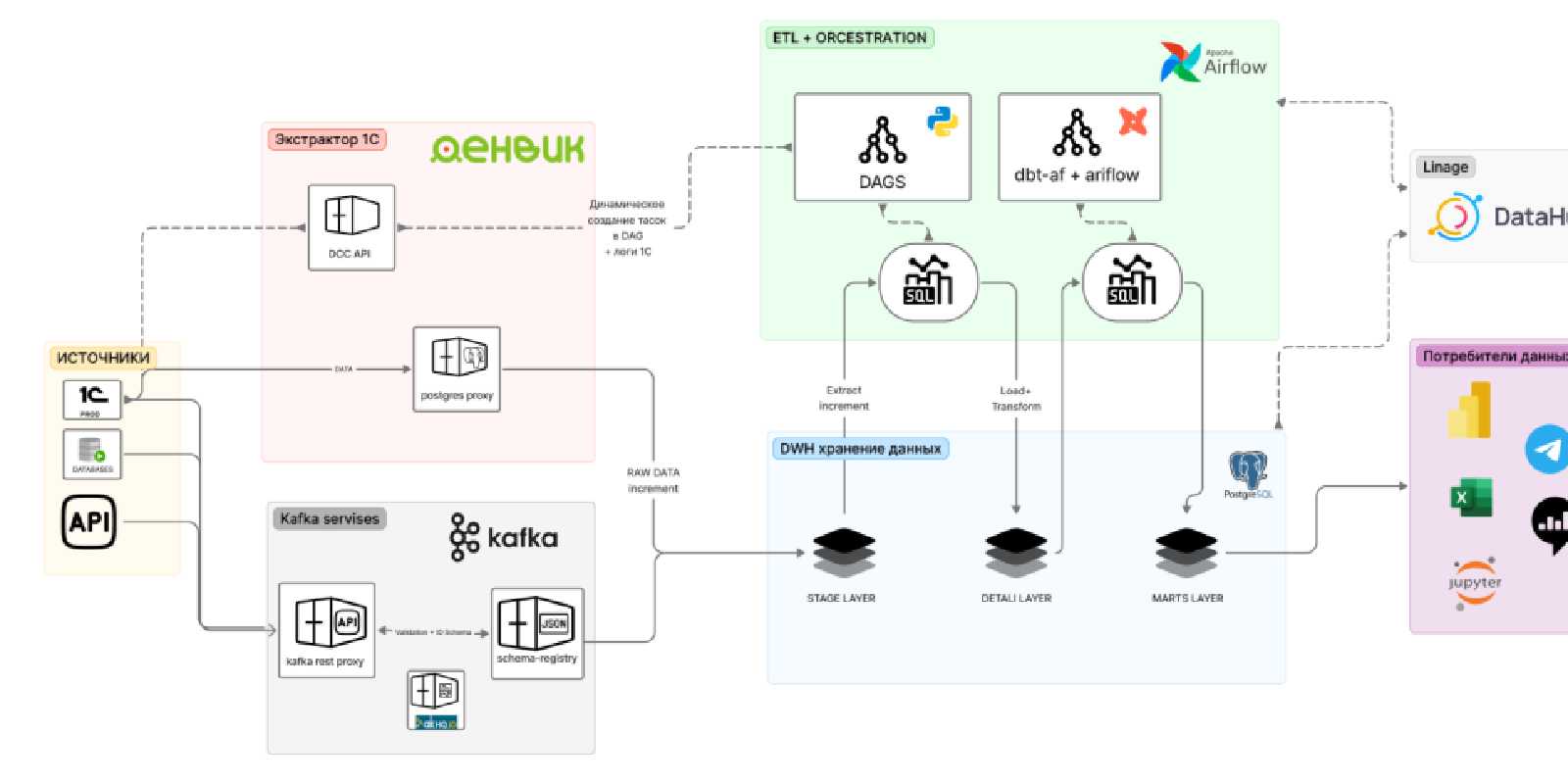

The Solution: Layered Architecture

Stage Layer

Denvic's “1C to BI Extractor” tool exports only deltas (new and changed records) and also picks up retroactive corrections made in 1C. Extraction now takes minutes instead of hours.
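A delta load of this kind can be pictured as an upsert into the stage table. The sketch below is only an illustration under stated assumptions: the extractor's real output format is not described here, so the `id`, `doc_date`, `amount`, and `version` fields and the `apply_deltas` helper are hypothetical.

```python
from typing import Iterable


def apply_deltas(stage: dict[str, dict], deltas: Iterable[dict]) -> dict[str, dict]:
    """Upsert delta records into an in-memory stage table keyed by record id.

    Hypothetical record layout: each record carries an `id` and a
    monotonically increasing `version`.
    """
    for record in deltas:
        key = record["id"]
        current = stage.get(key)
        # A retroactive correction keeps its old business date but arrives
        # with a newer version, so it replaces the stored record.
        if current is None or record["version"] > current["version"]:
            stage[key] = record
    return stage


stage = {
    "doc-1": {"id": "doc-1", "doc_date": "2024-01-10", "amount": 100, "version": 1},
}
deltas = [
    # Retroactive fix to an old document
    {"id": "doc-1", "doc_date": "2024-01-10", "amount": 120, "version": 2},
    # Brand-new record
    {"id": "doc-2", "doc_date": "2024-03-01", "amount": 50, "version": 1},
]
apply_deltas(stage, deltas)
```

Only the two delta records are processed, no matter how large the source database is, which is where the time saving over a full daily copy comes from.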

DDS Layer (Airflow + PostgreSQL)

A single DAG manages updates to ~50 tables, covering unique IDs, data cleansing, and SCD2 (slowly changing dimension type 2) for history tracking. CI/CD runs through GitLab with a two-tier environment (TEST in Docker → PROD).
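For readers unfamiliar with SCD2, here is a minimal in-memory sketch of the idea: when an attribute changes, the current row is closed and a new row is opened, so history is never overwritten. The `apply_scd2` helper, the `valid_from`/`valid_to` column names, and the `9999-12-31` open-end marker are assumptions for illustration, not details of the project's DDS layer.

```python
from datetime import date

OPEN_END = date(9999, 12, 31)  # assumed marker for "still current"


def apply_scd2(history: list[dict], key: str, attrs: dict, as_of: date) -> list[dict]:
    """Close the open version of `key` if its attributes changed, then open a new one.

    Validity intervals are half-open: [valid_from, valid_to).
    """
    current = next(
        (row for row in history if row["key"] == key and row["valid_to"] == OPEN_END),
        None,
    )
    if current is not None:
        if current["attrs"] == attrs:
            return history  # nothing changed, keep the open version
        current["valid_to"] = as_of  # close the old version
    history.append(
        {"key": key, "attrs": dict(attrs), "valid_from": as_of, "valid_to": OPEN_END}
    )
    return history


history: list[dict] = []
apply_scd2(history, "customer-1", {"city": "Moscow"}, date(2024, 1, 1))
apply_scd2(history, "customer-1", {"city": "Moscow"}, date(2024, 2, 1))  # no change
apply_scd2(history, "customer-1", {"city": "Kazan"}, date(2024, 3, 1))   # new version
```

After these three calls the history holds two rows: the Moscow version, closed on 2024-03-01, and the Kazan version, still open.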

Stock Processing (Kafka + Partitions)

The stock data holds 300 million rows, is subject to retroactive changes, and must be updated hourly. Kafka (via Confluent) buffers peak loads, while two Airflow DAGs process the data in batches with dynamic date-based partitioning.
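One way to picture dynamic date-based partitioning is to derive the target partition from each row's date and create it on demand. The sketch below assumes monthly partitions, a parent table named `stock`, and a `stock_date` column; none of these are confirmed by the case study. The generated statement uses PostgreSQL's declarative range partitioning syntax.

```python
from collections import defaultdict
from datetime import date


def month_bounds(day: date) -> tuple[date, date]:
    """Return the first day of `day`'s month and the first day of the next month."""
    start = day.replace(day=1)
    if start.month == 12:
        end = date(start.year + 1, 1, 1)
    else:
        end = date(start.year, start.month + 1, 1)
    return start, end


def partition_name(parent: str, day: date) -> str:
    start, _ = month_bounds(day)
    return f"{parent}_{start:%Y_%m}"


def partition_ddl(parent: str, day: date) -> str:
    """Build the DDL that creates the monthly partition covering `day`."""
    start, end = month_bounds(day)
    return (
        f"CREATE TABLE IF NOT EXISTS {partition_name(parent, day)} "
        f"PARTITION OF {parent} FOR VALUES FROM ('{start}') TO ('{end}');"
    )


def group_by_partition(batch: list[dict], parent: str) -> dict[str, list[dict]]:
    """Group a batch of rows by the partition each row belongs to."""
    groups: dict[str, list[dict]] = defaultdict(list)
    for row in batch:
        groups[partition_name(parent, row["stock_date"])].append(row)
    return dict(groups)


batch = [
    {"sku": "A", "stock_date": date(2024, 12, 30), "qty": 5},
    {"sku": "A", "stock_date": date(2023, 12, 2), "qty": 7},  # retroactive change
    {"sku": "B", "stock_date": date(2024, 12, 31), "qty": 1},
]
groups = group_by_partition(batch, "stock")
ddl = partition_ddl("stock", date(2024, 12, 30))
```

Grouping by partition means a retroactive change touches only the partition for its own date, so an hourly batch does not need to rewrite the rest of the table.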

Data Marts (dbt-core + dbt-af)

Models are structured by domain, macros keep the code DRY, and dbt-af generates the DAGs automatically. Analysts develop data marts on their own, with review by a data engineer.

Results

| Before | After |
|---|---|
| 2-day-old data | Hourly updates |
| 40 GB RAM for full recalculation | Efficient resource usage |
| Days to build a new mart | 24 hours for a new mart |
| Manual verification | Automated dbt tests |
| Heavy load on 1C | Minimal load on 1C |

Tech Stack

PostgreSQL, Apache Airflow, dbt-core, dbt-af (Toloka/dmp-labs), Kafka (Confluent), GitLab CI/CD, Docker, Power BI Report Server, Yandex DataLens.

This case study is a great illustration of how Airflow + dbt with proper engineering discipline transforms chaos into a manageable data platform.